In its annual report, the AI Now Institute, an interdisciplinary research center studying the societal implications of artificial intelligence, called for a ban on technology designed to recognize people’s emotions in certain cases. Specifically, the researchers said affect recognition technology, also called emotion recognition technology, should not be used in decisions that “impact people’s lives and access to opportunities,” such as hiring decisions or pain assessments, because it is not sufficiently accurate and can lead to biased decisions.

Outgrowth of facial recognition

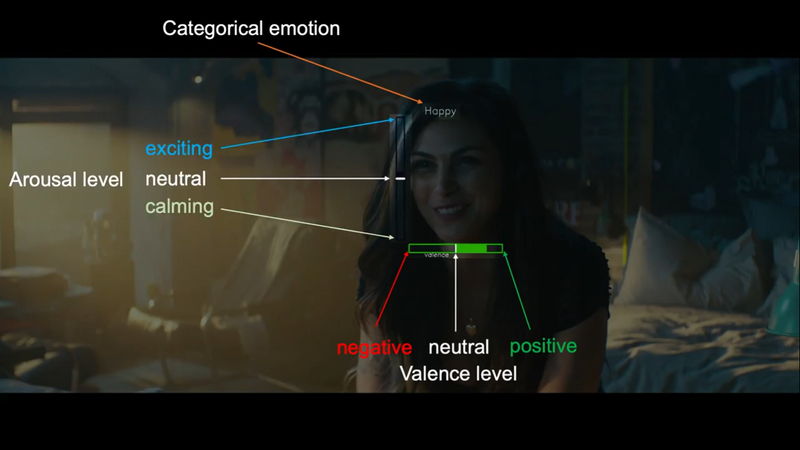

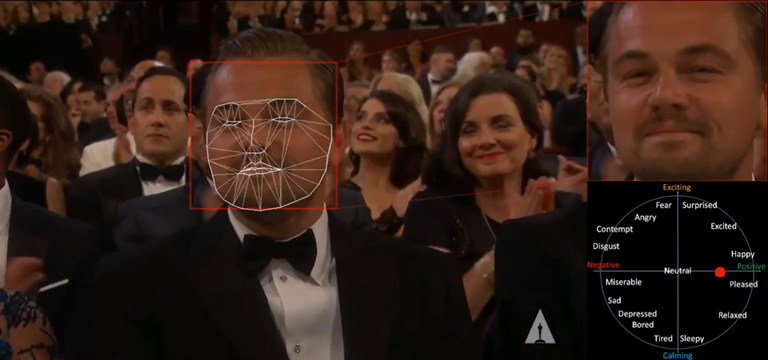

Researchers have been actively working for at least a decade on computer vision algorithms that can determine the emotions and intent of humans, along with making other inferences. Facial expression analysis has been around since at least 2003, and efforts to get computers to recognize emotion go back even further. The latest technology uses data-centric techniques known as "machine learning" (algorithms that process data to "learn" how to make decisions) to achieve even more accurate affect recognition.

The challenge of reading emotions

Researchers are always looking to do new things by building on what has been done before. Emotion recognition is enticing because humans somehow do it relatively well from an early age, yet replicating that skill with computer vision remains challenging.

While it's possible to do some pretty remarkable things with images, such as stylizing a photo to look as if a famous artist drew it, creating photo-realistic faces and even producing so-called deepfakes, the ability to infer properties such as human emotions from a real image has always been of interest to researchers. Emotions are difficult because they tend to depend on context.

For instance, when someone is concentrating on something, it might appear that they're simply thinking. Facial recognition has come a long way using machine learning, but inferring a person's emotional state purely from their face misses key information. Emotions are expressed not only through facial expressions but also through where people are and what they're doing. These contextual cues are difficult to feed into even modern machine learning algorithms. To address this, there are active efforts to augment artificial intelligence techniques to consider context, not just for emotion recognition but for all kinds of applications.